Convex

Introspect and query your apps deployed to Convex.

What is Convex MCP Server?

Convex MCP Server is a tool for AI agents to interact with applications deployed on the Convex backend platform using the Model Context Protocol (MCP). It allows agents to introspect databases, query data, execute functions, and manipulate environment variables.

How to use Convex MCP Server?

1. Install Convex MCP Server in Cursor version 0.47 or later:

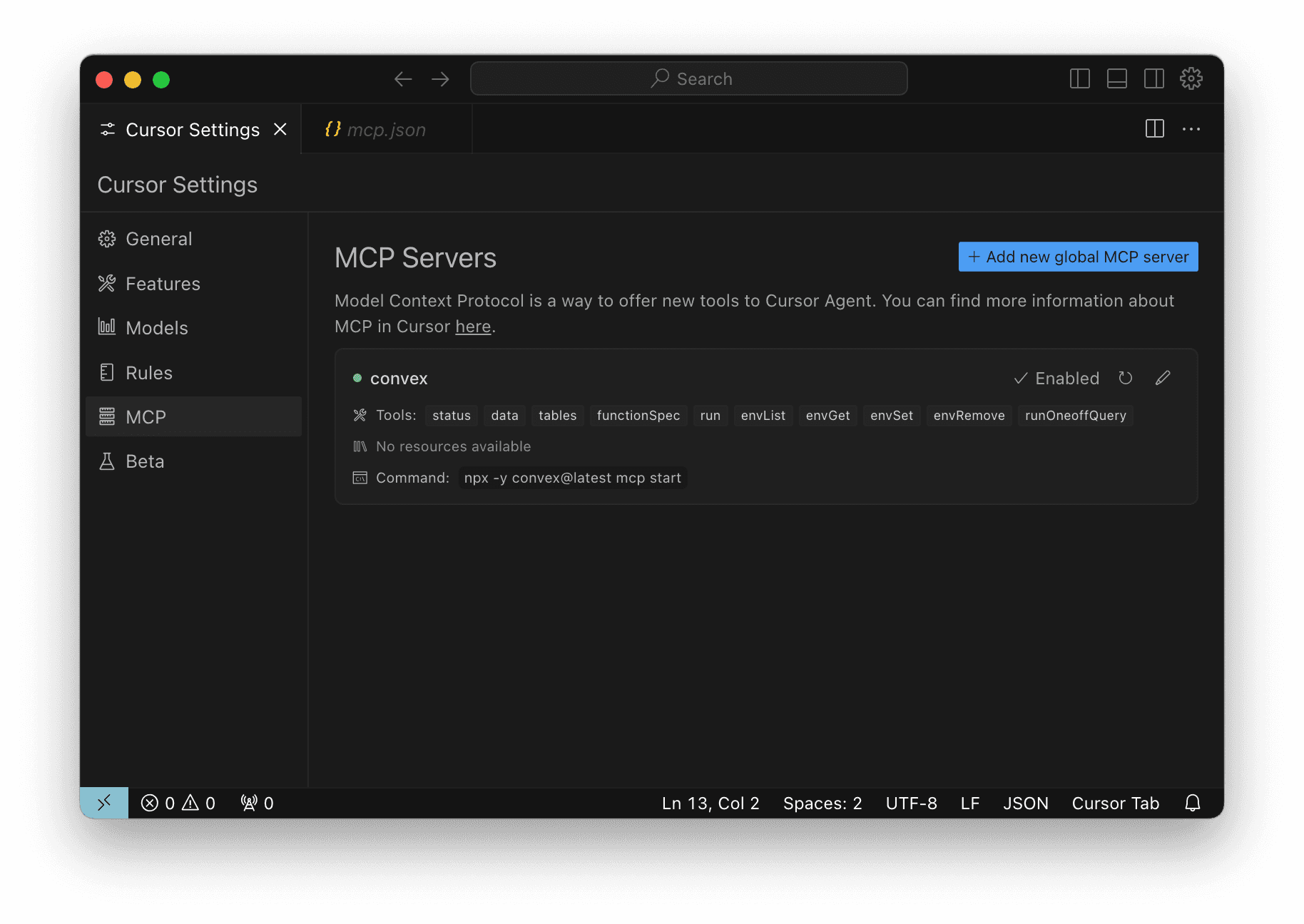

- Go to "Cursor Settings > MCP"

- Add a new global MCP server with the configuration:

```json
{
  "mcpServers": {
    "convex": {
      "command": "npx",
      "args": [
        "-y",
        "convex@latest",
        "mcp",
        "start"
      ]
    }
  }
}
```

2. Enable the MCP server in Cursor and let it access your Convex deployments.

Key features of Convex MCP Server?

- **Deployment introspection**: List and select Convex deployments (production/dev/preview)

- **Table inspection**: View table schemas (declared and inferred) and paginate through documents

- **Query execution**: Run sandboxed one-off queries against the database

- **Function interaction**: List and execute deployed functions (with type checking)

- **Environment variables**: Manage deployment environment variables

Use cases of Convex MCP Server?

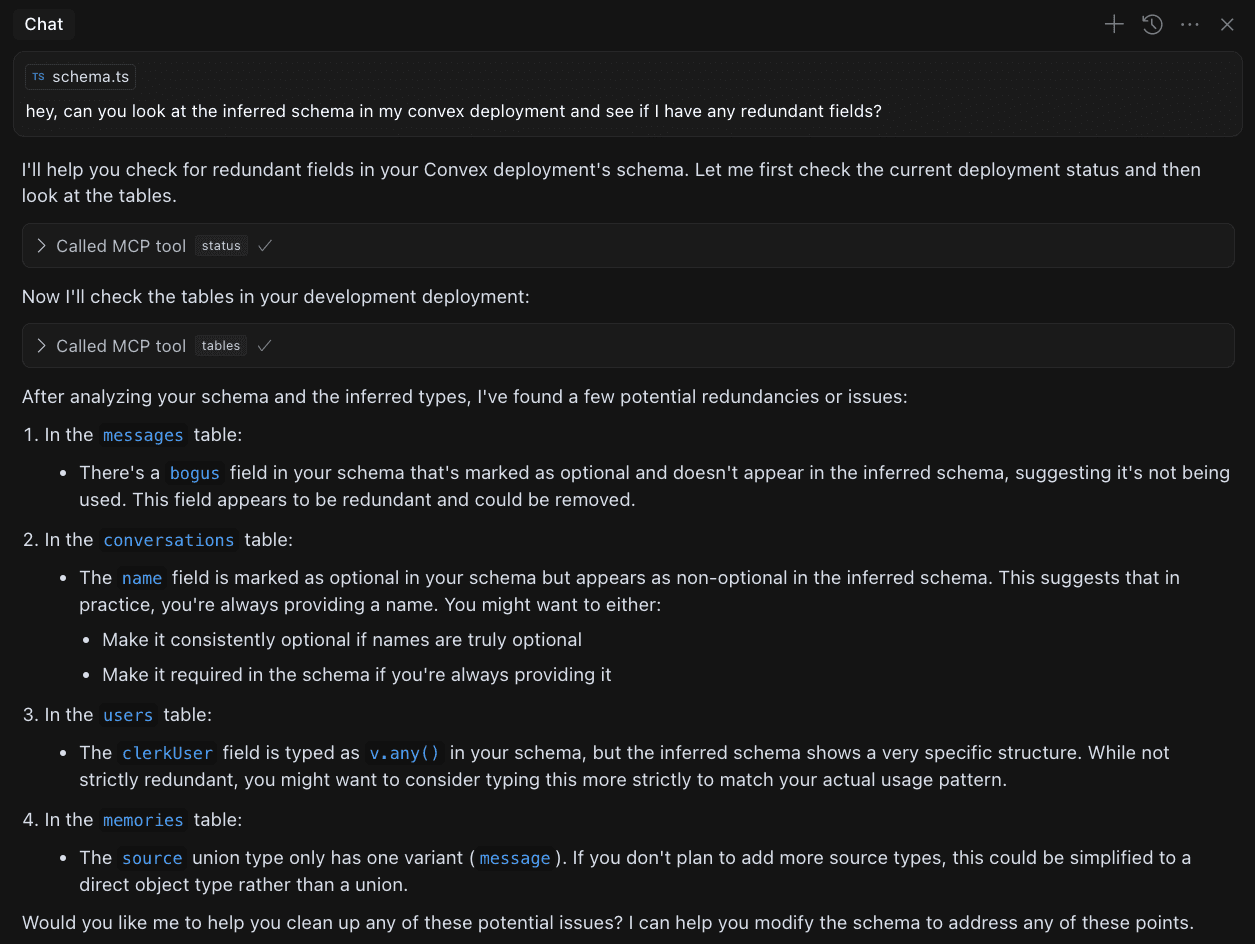

1. AI agents analyzing deployment data to suggest schema improvements

2. Automated troubleshooting of production issues

3. Data exploration through ad-hoc one-off queries

4. Agent-driven quality assurance testing

5. Automated deployment health checks

FAQ from Convex MCP Server?

- What is MCP?

> MCP (Model Context Protocol) is a standardized way for AI agents to interact with different tools and APIs.

- Which agents are supported?

> The beta has only been tested with Cursor 0.47+ on macOS Sequoia. Testing with Windsurf, Claude Desktop, and other agents is planned.

- Why a global instead of project-specific MCP server?

> To maximize compatibility and simplify setup with current agent implementations.

Convex MCP Server
===============

4 months ago

Hey everyone! We just shipped Convex 1.19.5, which includes a beta MCP server for Convex. Give it a spin and send us all your feedback.

So, what's an MCP server? The Model Context Protocol (MCP) is a standardized way for AI agents to interact with different tools. For example, there are MCP servers for reading from the local filesystem, searching the web, and even controlling a browser.

Convex's MCP server supports introspecting a deployment's tables and functions, calling functions, and reading and writing data. Agents can even write code for one-off queries: since Convex queries are fully sandboxed and can't write to the database, it's safe to let the agent go wild!

Models are really good at writing code, so exposing a tool that lets them safely run code makes them really powerful. Here's a demo of an agent writing a JavaScript query to answer ad-hoc questions about a chat app's data:

Installation (Cursor)

On Cursor version 0.47, go to "Cursor Settings > MCP", and click on "Add new global MCP server". Then, fill out this "convex" section in the mcp.json:

```json
{
  "mcpServers": {
    "convex": {
      "command": "npx",
      "args": [
        "-y",
        "convex@latest",
        "mcp",
        "start"
      ]
    }
  }
}
```

After adding the server, ensure the "convex" server is enabled and lit up green (you may need to click "Disabled" to enable it). Here's what my Cursor Settings look like:

If you're running into issues, confirm you're using Cursor version 0.47.5 or later (check under "Cursor > About Cursor" on macOS).

Other agents

For the MCP server beta, we've only tested it with Cursor on macOS Sequoia. We'll be testing more agents (e.g. Windsurf and Claude Desktop) on more operating systems in the future. But, in the meantime, configuring these tools with the same npx -y convex@latest mcp start command should work. Please let us know in Discord if you run into any issues setting it up.

Tools

Let's walk through each of the tools on my Claude at Home project. It's a simple ChatGPT clone that I use as a sandbox for playing with different ideas for tool use.

Deployments

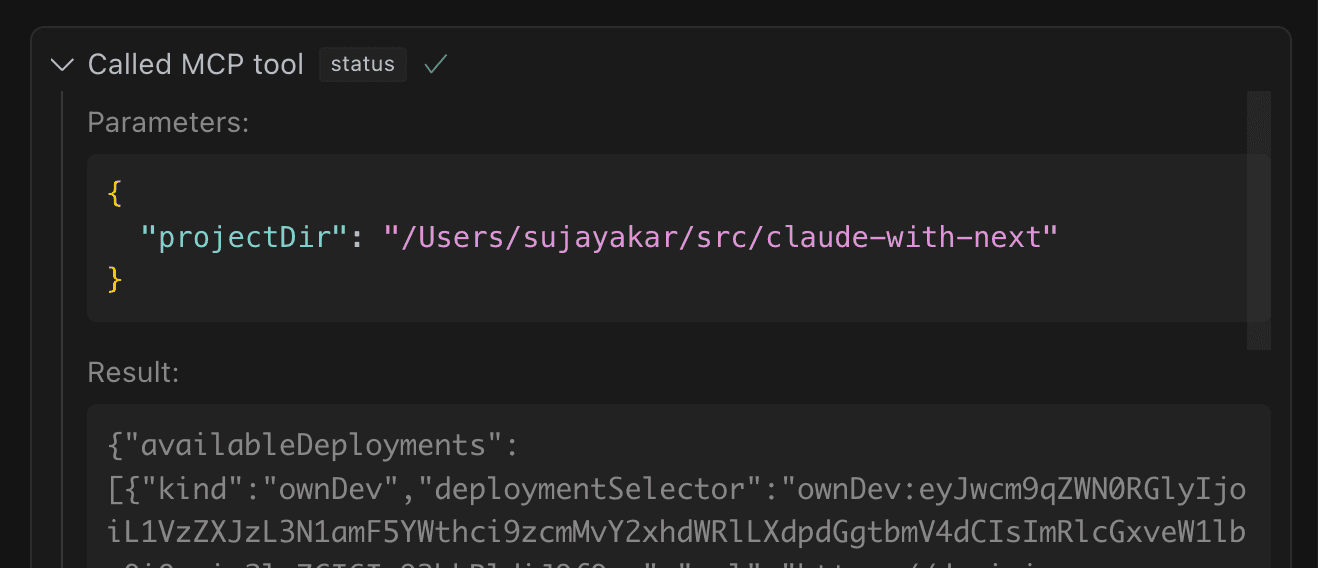

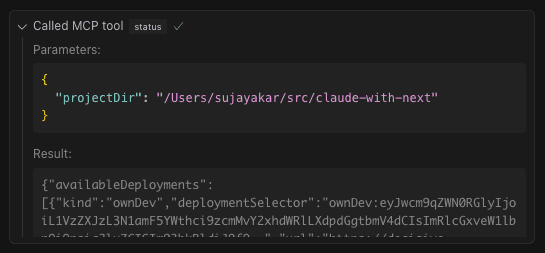

Each app deployed to Convex corresponds to a project, which can have multiple deployments: a single production deployment, a development deployment per team member, and potentially many preview deployments. The status tool lets the model query which deployments it has access to and gives it a "deployment selector" it can pass to subsequent tool calls:

Tables

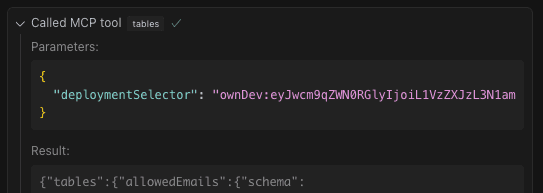

The model can list all of a deployment's tables with the tables tool. In addition to listing the tables' names, this tool returns each table's declared schema (if present) and its inferred schema. Convex automatically tracks the shape of data in your deployment, even if you haven't declared a schema yet, and agents can use this to suggest improvements to your schema. This can be useful for filling out v.any() types in your schema or finding unused optional fields:

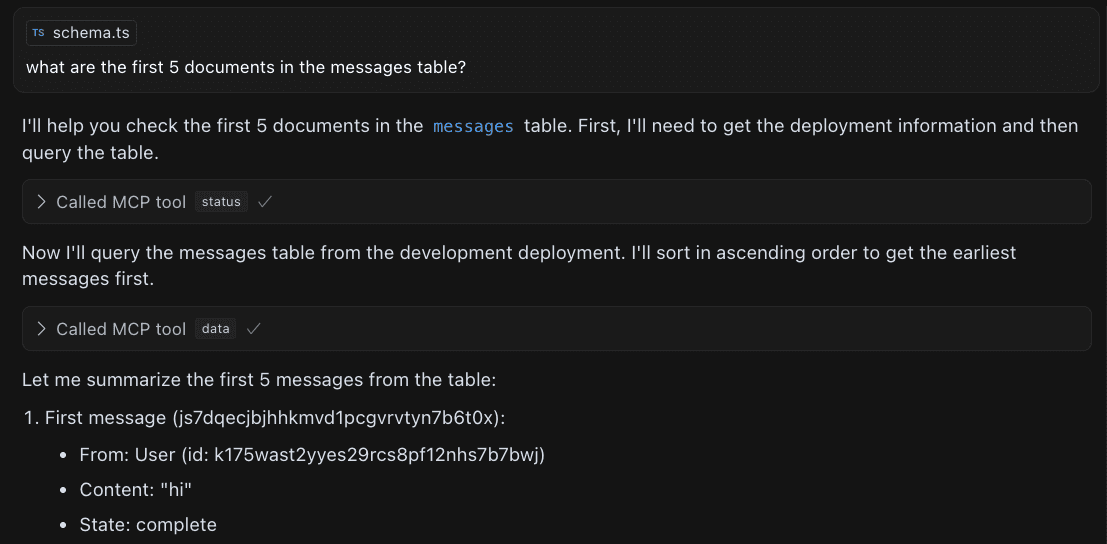

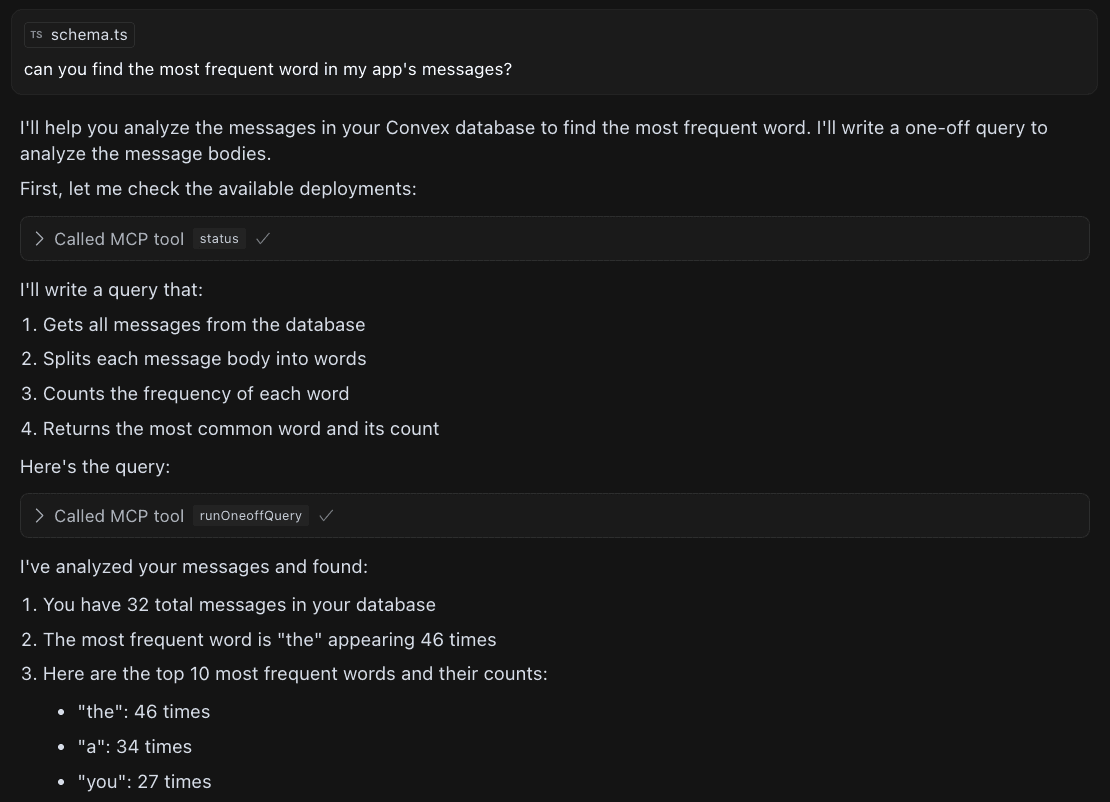

Once it has listed table metadata, the agent can use the data tool to paginate through a table's documents:
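Cursor-based pagination of this kind can be sketched roughly as follows. This is only an illustration, not the data tool's actual interface: `Page`, `fetchPage`, and `readAll` are hypothetical names, and an in-memory array stands in for a table.

```typescript
// Hypothetical page shape: a batch of documents plus a cursor for the next batch.
type Page<T> = { documents: T[]; cursor: number | null };

// Stand-in for a paginated read; an in-memory array plays the role of a table.
function fetchPage<T>(table: T[], cursor: number, limit: number): Page<T> {
  if (limit <= 0) throw new Error("limit must be positive");
  const documents = table.slice(cursor, cursor + limit);
  const next = cursor + limit;
  // A null cursor signals that there are no more documents to read.
  return { documents, cursor: next < table.length ? next : null };
}

// Follow cursors until the table is exhausted, collecting every document.
function readAll<T>(table: T[], limit: number): T[] {
  const all: T[] = [];
  let cursor: number | null = 0;
  while (cursor !== null) {
    const page: Page<T> = fetchPage(table, cursor, limit);
    all.push(...page.documents);
    cursor = page.cursor;
  }
  return all;
}
```

An agent reading page by page can stop early once it has seen enough documents, rather than always draining the table as `readAll` does.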

One of our principles for effective autonomous coding is to express as much as possible in code. So, the runOneoffQuery tool lets the model just write code for querying a deployment's data. This code is fully sandboxed and can't modify the database, so it can't do any harm! Here's an example of it writing a one-off query to find the most frequently occurring word in my chat app's messages:
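To give a flavor of the logic such a one-off query might contain, here is a minimal sketch of a word-frequency count in TypeScript. It is not the code the agent wrote, and it leaves out how the query reads from the database: the `Message` shape, its `body` field, and the `mostFrequentWord` name are all assumptions made for illustration.

```typescript
// Hypothetical message shape; the real chat app's schema is not shown in this article.
type Message = { body: string };

// Return the most frequent word across all message bodies, or null if there are no words.
function mostFrequentWord(messages: Message[]): string | null {
  const counts = new Map<string, number>();
  for (const { body } of messages) {
    // Lowercase, then split on anything that is not a letter, digit, or apostrophe.
    for (const word of body.toLowerCase().split(/[^a-z0-9']+/)) {
      if (word === "") continue;
      counts.set(word, (counts.get(word) ?? 0) + 1);
    }
  }
  let best: string | null = null;
  let bestCount = 0;
  for (const [word, count] of counts) {
    if (count > bestCount) {
      best = word;
      bestCount = count;
    }
  }
  return best;
}
```

Because the function only reads its input and returns a value, it fits the read-only nature of a sandboxed query.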

Functions

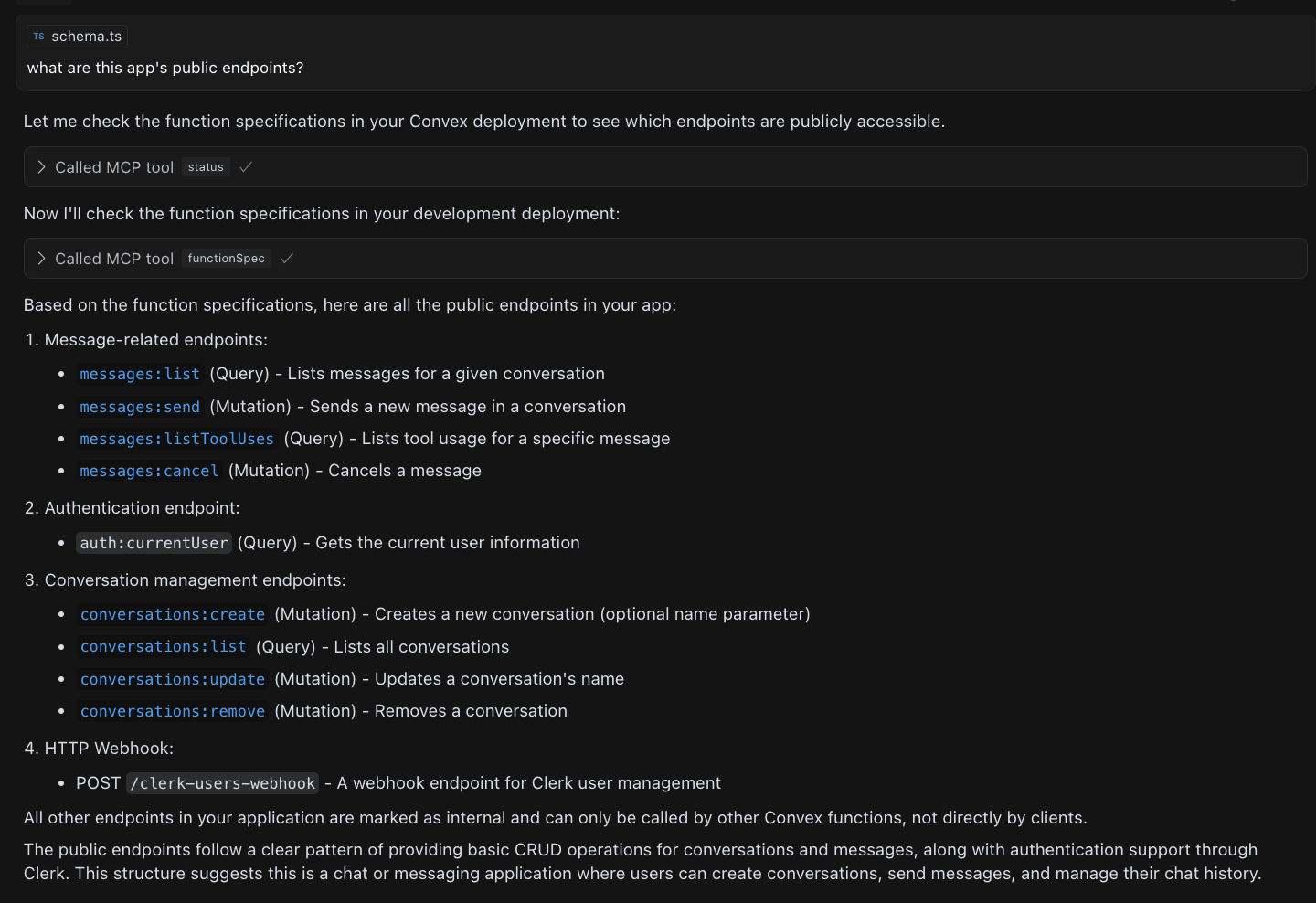

The functionSpec tool similarly lets the model see all of the functions deployed along with their types and visibility. Even though the agent could figure this out by reading through all of the code in the convex/ directory, it's a lot more efficient to get the API in a single tool call:

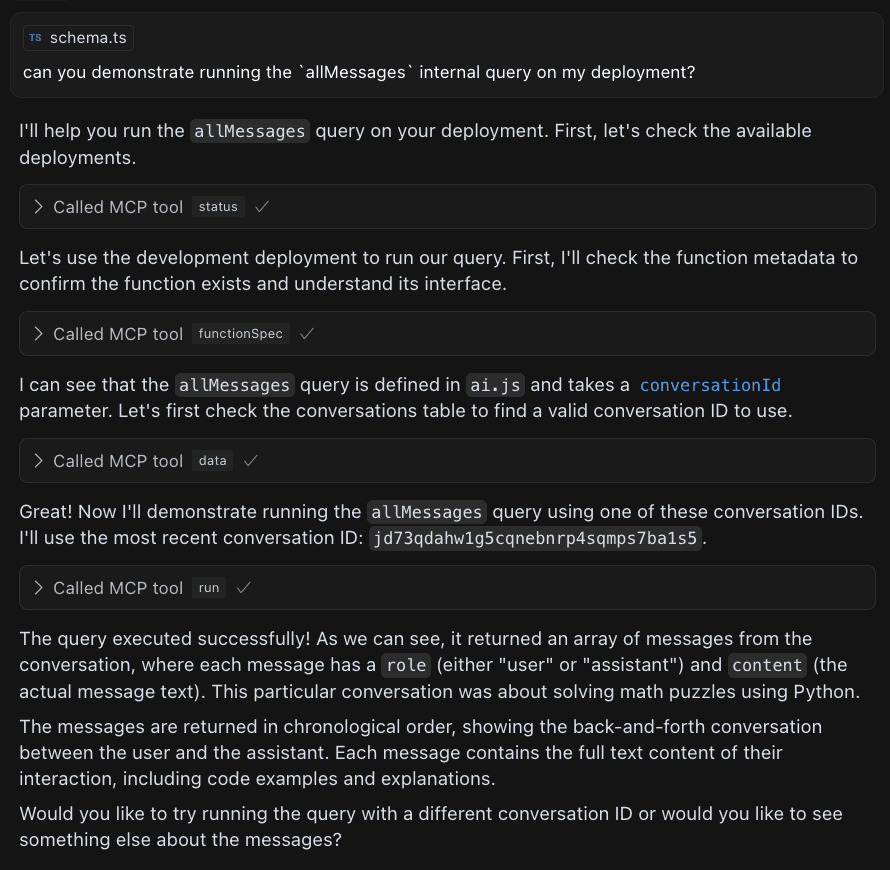

Just like with tables, after reading functions' metadata, the agent can use the run tool to actually run them. In this example, I ask the agent to demonstrate running an internal query, and it intelligently looks at the function's interface, realizes it needs a conversation ID to pass in, and then uses the data tool to pick an example before proceeding:

Environment variables

These aren't as exciting, but the envList, envGet, envSet, and envRemove tools let the agent manipulate a deployment's environment variables.

Implementation notes

MCP servers and their clients are still pretty early, and we're curious to see what patterns emerge over time. Here are the design decisions we encountered and the workarounds we needed to get everything working.

Global vs. project server?

Cursor just recently added "project-specific MCP configuration," which allows configuring a different set of MCP servers for different project directories. Most other tools, like Claude Desktop and Windsurf, currently only support a single set of globally configured MCP servers.

For broad compatibility, we chose to use a global MCP server. This means that all Convex projects on a computer talk to the same MCP server. This MCP server isn't aware of where Convex projects live, so tool calls need to pass their project directory in as arguments.

However, we also observed during the Fullstack-Bench experiments that Cursor Agent would sometimes get confused when having to pass around the current working directory, especially when it got "frustrated" during a debugging loop.

To avoid this, our tool protocol has the model only pass in the project directory once during an initial status call:

The status call returns an opaque "deployment selector" that has the project directory baked in (and obfuscated so the agent doesn't get confused by it). Then, the agent just needs to pass this selector to subsequent calls untouched. If coding models are good at one thing, it's copy-pasting :)
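The opaque-selector idea can be sketched as below. This is a guess at the general shape, not Convex's actual encoding: the `SelectorPayload` fields, the hex obfuscation, and both function names are hypothetical.

```typescript
// Hypothetical payload; the real selector's contents and encoding are not described here.
type SelectorPayload = { projectDir: string; deployment: string };

// Encode the payload as a hex string so the agent sees an opaque token, not a path.
function encodeSelector(payload: SelectorPayload): string {
  const json = JSON.stringify(payload);
  let hex = "";
  for (let i = 0; i < json.length; i++) {
    // Each UTF-16 code unit becomes exactly four hex digits.
    hex += ("000" + json.charCodeAt(i).toString(16)).slice(-4);
  }
  return hex;
}

// Recover the payload on the server side from a selector passed back untouched.
function decodeSelector(selector: string): SelectorPayload {
  let json = "";
  for (let i = 0; i < selector.length; i += 4) {
    json += String.fromCharCode(parseInt(selector.slice(i, i + 4), 16));
  }
  return JSON.parse(json) as SelectorPayload;
}
```

The point of the design is that the model never has to reason about the directory again: it copies one string from the status result into every later call, and the server unpacks it.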

Transport and protocol

MCP supports a rich set of interfaces (Resources, Prompts, Tools, Sampling, Roots, and Notifications), but Cursor and Windsurf currently only support tools. So, Convex's MCP server currently exposes all of its functionality through tools alone, even if concepts like Roots and Resources would make more sense.

To keep things simple, we just use the stdio transport protocol on a locally running server.
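For readers unfamiliar with the stdio transport: MCP messages are JSON-RPC 2.0 objects written one per line to the server's stdin and stdout. The sketch below shows what framing a `tools/call` request could look like. The helper names are made up for illustration, and the `projectDir` argument in the usage note is an assumption, since this article doesn't list the tools' argument names.

```typescript
// JSON-RPC 2.0 request shape, as used by MCP.
type JsonRpcRequest = {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
};

// Serialize a request as a single line; stdio messages are newline-delimited.
// JSON.stringify escapes newlines inside strings, so the line never breaks early.
function frame(request: JsonRpcRequest): string {
  return JSON.stringify(request) + "\n";
}

// Build a `tools/call` request for a named tool with string-valued arguments.
function toolCall(id: number, name: string, args: Record<string, string>): string {
  return frame({
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name, arguments: args },
  });
}
```

For example, `toolCall(1, "status", { projectDir: "/path/to/project" })` produces one line that a client could write to the stdin of the process started by `npx -y convex@latest mcp start`.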

We also only use string arguments for all MCP tools. Despite MCP supporting JSON schema with rich structured types, we noticed that the MCP Inspector Tool only supports string arguments. Even when using Cursor Agent, nested JSON objects were failing JSON schema validation, so it was simpler just to get everything working with strings.
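One way to work with string-only arguments is to have the model pass structured values as JSON text and validate them on the server. The sketch below assumes that approach; `parseObjectArg` is a hypothetical helper, not part of Convex's implementation.

```typescript
// Parse a tool argument that arrives as a string but carries a JSON object.
function parseObjectArg(name: string, raw: string): Record<string, unknown> {
  let value: unknown;
  try {
    value = JSON.parse(raw);
  } catch {
    throw new Error(`Argument "${name}" is not valid JSON`);
  }
  // Reject primitives, null, and arrays so callers can rely on an object.
  if (typeof value !== "object" || value === null || Array.isArray(value)) {
    throw new Error(`Argument "${name}" must be a JSON object`);
  }
  return value as Record<string, unknown>;
}
```

The tool's declared schema stays a flat set of strings, which the clients mentioned above can handle, and the error messages give the agent something concrete to fix when it passes malformed input.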

Conclusion

It's really fun to get surprised by how much agents can do with access to a few tools. When writing this article, I was surprised how little prompting Cursor Agent needed to figure out how to plan and sequence multiple tool calls.

We'll be adding more tools, testing more platforms, and curating more workflows over time. Give npx convex mcp a shot, let us know what works and what doesn't, and show us all the cool workflows you come up with!


©2025 Convex, Inc.